Prometheus 3.0 and beyond aka keeping it simple since 2012

I first started using Prometheus in 2016, and it’s been one of the best things to happen to me. I’ve been contributing to Prometheus since 2017, sometimes more and sometimes less. And the past few months, my contributions have been few and far between. However, I have been spending more and more time thinking about Prometheus and where it should head and how it can interplay with OpenTelemetry. This is me putting down a lot of the different thoughts I’ve been having.

Note: These are my personal thoughts, and not those of the Prometheus project. Any ideas proposed below are just that, ideas and proposals, not accepted consensus in the Prometheus ecosystem. In fact, I can see some of the ideas getting justifiable pushback ;)

What is Prometheus?

Prometheus is not just limited to the server you run. It is made of several components:

Exposition format and client libraries

Prometheus has a simple text based exposition format that is used to expose and collect metrics from your applications. It looks like the following:

# HELP airgradient_info AirGradient device information
# TYPE airgradient_info info
airgradient_info{airgradient_serial_number="84fce6025620",airgradient_device_type="ONE_INDOOR",airgradient_library_version="3.0.6"} 1
# HELP airgradient_wifi_rssi_dbm WiFi signal strength from the AirGradient device perspective, in dBm
# TYPE airgradient_wifi_rssi_dbm gauge
# UNIT airgradient_wifi_rssi_dbm dbm
airgradient_wifi_rssi_dbm{} -25
# HELP airgradient_co2_ppm Carbon dioxide concentration as measured by the AirGradient S8 sensor, in parts per million
# TYPE airgradient_co2_ppm gauge
# UNIT airgradient_co2_ppm ppm
airgradient_co2_ppm{} 532

It also has language-specific SDKs to help you instrument your applications. There are a few official ones, but if you include the community-supported SDKs, then most of the popular languages have a Prometheus SDK.
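To give a feel for how little there is to the text format, here is a minimal sketch that renders one metric family by hand. This is not one of the Prometheus SDKs: `render_metric` and `escape_label_value` are names I made up for illustration, and the sketch skips things a real client library handles (escaping in HELP text, timestamps, histograms and so on).

```python
def escape_label_value(value: str) -> str:
    # The text format escapes backslash, double quote and newline in label values.
    return value.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")


def render_metric(name, metric_type, help_text, samples):
    """Render one metric family; samples is a list of (labels_dict, value) pairs."""
    lines = [f"# HELP {name} {help_text}", f"# TYPE {name} {metric_type}"]
    for labels, value in samples:
        label_str = ",".join(
            f'{key}="{escape_label_value(val)}"' for key, val in sorted(labels.items())
        )
        lines.append(f"{name}{{{label_str}}} {value}")
    return "\n".join(lines) + "\n"


# Hypothetical reading, mirroring the AirGradient sample above.
output = render_metric(
    "airgradient_co2_ppm",
    "gauge",
    "Carbon dioxide concentration, in parts per million",
    [({}, 532)],
)
print(output, end="")
```

The last line printed is `airgradient_co2_ppm{} 532`, the same shape as the sample above. In practice you would reach for an SDK, but it is nice that the wire format is something you can write with string formatting.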

PromQL and the data model

One of Prometheus’ main successes is the multidimensional data model. It is now everywhere and feels like the obvious thing, but before Prometheus, the popular way to represent metrics was the limited hierarchical representation used by StatsD and Graphite. Prometheus made the label-based representation popular. It is powerful and flexible and, it turns out, a great fit for monitoring use cases.
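Here is a small sketch of the difference, using made-up request counts. The metric names, label names and the `select` and `sum_by` helpers are all hypothetical; the point is only that with labels, each dimension can be filtered or aggregated on its own instead of being baked into one dotted path.

```python
# Graphite/StatsD style: every dimension is baked into one dotted path.
hierarchical = {
    "prod.eu.api.requests.get.200": 1027,
    "prod.eu.api.requests.get.500": 3,
    "prod.us.api.requests.get.200": 2210,
}

# Prometheus style: one metric name plus a set of label pairs per series.
labelled = [
    ({"__name__": "http_requests_total", "region": "eu", "method": "GET", "code": "200"}, 1027),
    ({"__name__": "http_requests_total", "region": "eu", "method": "GET", "code": "500"}, 3),
    ({"__name__": "http_requests_total", "region": "us", "method": "GET", "code": "200"}, 2210),
]


def select(series, **matchers):
    """Keep the series whose labels equal every given matcher."""
    return [
        (labels, value)
        for labels, value in series
        if all(labels.get(key) == want for key, want in matchers.items())
    ]


def sum_by(series, label):
    """Aggregate values by one label, ignoring all the others."""
    totals = {}
    for labels, value in series:
        key = labels.get(label, "")
        totals[key] = totals.get(key, 0) + value
    return totals


errors = select(labelled, code="500")
per_region = sum_by(labelled, "region")
print(per_region)  # {'eu': 1030, 'us': 2210}
```

`sum_by(labelled, "region")` is roughly what `sum by (region) (http_requests_total)` expresses in PromQL. Doing the same over the dotted paths means knowing which position in the path holds the region.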

PromQL is one of the best query languages to query time-series data. A lot more projects in the monitoring space are adding support for it because it’s just that good. GCP’s Cloud Monitoring is deprecating its own query language (MQL) in favor of PromQL, which is a great testimony!

The exporter ecosystem

The exporter ecosystem lets you collect metrics for almost every popular piece of software out there. Every time I install a device or software, I first look up if it has a Prometheus exporter and I almost always find one. This includes my 3D printer and my air quality monitor.

This is one of the superpowers of Prometheus and lets users of Prometheus get productive quickly.

The Prometheus Server & Alertmanager

This is what you run to collect, store, query and alert on the metrics data from applications and infrastructure. It’s simple to install (single, static binary) and run (./prometheus and it just works) with no external dependencies.

You cannot scale out horizontally, and it is by design. By not depending on network or consensus, it’s more stable and easier to operate. For redundancy and reliability, you just run multiple Prometheus servers. If one node is down, the other is still running and alerting on your metrics.

A large part of Prometheus’ popularity comes from this simplicity. You can get started in a few minutes, and most companies don’t have a monitoring load that requires them to scale out horizontally.

Hosted / Scalable Prometheus

And for those that do need to scale out, there is a vibrant ecosystem of projects and services out there. For the OSS self-hosted users, there are Mimir, Thanos, Cortex and VictoriaMetrics, all of which are great backends that let you scale out Prometheus. If you would like a SaaS model, there are even more options out there with Grafana Cloud, Amazon Managed Prometheus, Google Managed Prometheus, Chronosphere, Last9, etc.

All of these solutions allow you to ingest metrics in the Prometheus formats and let you query and alert on them with PromQL.

I include these here because for a lot of newer users, their first experience with Prometheus is now a managed SaaS solution. This is what they associate with Prometheus and having these available has made Prometheus available to a wider range of users. I think these solutions are a core part of the Prometheus ecosystem.

OpenTelemetry & Prometheus

OpenTelemetry is another project in the space that is making remarkable progress in the standardization of telemetry data. It is a specification (and the implementation) for how to instrument and emit telemetry data. It covers traces, logs and profiles in addition to metrics. It also has an exposition format and a set of SDKs for instrumenting your applications.

OpenTelemetry has a more comprehensive instrumentation philosophy and also provides auto-instrumentation agents that make it easy to collect telemetry from applications. To monitor a Java service, you don’t actually need to change the code for the application, but run the application with a few additional flags pointing to the instrumentation agent.

java -javaagent:path/to/opentelemetry-javaagent.jar -Dotel.service.name=your-service-name -jar myapp.jar

OR

export JAVA_TOOL_OPTIONS="-javaagent:path/to/opentelemetry-javaagent.jar"
export OTEL_SERVICE_NAME="your-service-name"
java -jar myapp.jar

The application can now send traces, metrics and logs to the backends of your choice. This experience is quite compelling and before OpenTelemetry, to get this experience you needed to get locked into the ecosystem of a few vendors that offered it. It was also not something that Prometheus focused on. Prometheus always leaned towards explicit instrumentation and modifying your code.

I think OpenTelemetry is a massive win for developers and the observability ecosystem. It also doesn’t have a “backend” or storage and querying in its scope. I think Prometheus can be a great store for OTel metrics data. With OTel as the instrumentation layer and Prometheus as the query and alerting layer, developers can focus on getting work done.

However, how do the Prometheus exposition format and client SDKs fit in with the OpenTelemetry exposition format and SDKs? And is storage the only place the two communities can collaborate?

Simplicity is the selling point

The Prometheus exposition format and SDKs are far simpler than the OTel counterparts and there is a good reason for them to both co-exist. Tiny microcontrollers now have Prometheus servers built into them because the metrics format is super straightforward. The client SDKs explicitly do not do a lot of the things that the OTel SDKs can do, making them simpler to use and understand and in some cases, more performant. The pull mechanism means I can just curl a /metrics endpoint on a service to debug the pipeline.
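As a rough illustration of how approachable the format is, here is a naive parser for the body you would get back from curling a /metrics endpoint. It is a sketch with stated limits, not the real Prometheus parser: it skips comment lines, ignores samples that carry timestamps, and does not unescape label values. `parse_exposition` and the regular expressions are my own names.

```python
import re

# metric_name, optional {labels}, then a single value token.
SAMPLE_RE = re.compile(r'^([a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{(.*)\})?\s+(\S+)$')
# label_name="value", allowing backslash escapes inside the quotes.
LABEL_RE = re.compile(r'([a-zA-Z_][a-zA-Z0-9_]*)="((?:[^"\\]|\\.)*)"')


def parse_exposition(body):
    """Return a list of (name, labels, value) for each sample line in body."""
    samples = []
    for line in body.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # blank lines and HELP/TYPE/UNIT metadata
        match = SAMPLE_RE.match(line)
        if match is None:
            continue  # anything this sketch does not understand
        name, raw_labels, raw_value = match.groups()
        labels = dict(LABEL_RE.findall(raw_labels or ""))
        samples.append((name, labels, float(raw_value)))
    return samples


# A trimmed copy of the AirGradient output shown earlier.
scraped = """\
# TYPE airgradient_info info
airgradient_info{airgradient_serial_number="84fce6025620",airgradient_device_type="ONE_INDOOR"} 1
# TYPE airgradient_wifi_rssi_dbm gauge
airgradient_wifi_rssi_dbm{} -25
"""

samples = parse_exposition(scraped)
for name, labels, value in samples:
    print(name, labels, value)
```

Being able to read the payload with your eyes, or with twenty lines of code, is a big part of why debugging a pull-based pipeline feels so easy.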

Maybe it’s pure familiarity or bias, but I prefer the text format over the proto or JSON format from OTel. And I prefer the simple ergonomics of the Prometheus SDKs over OTel’s SDKs. And I have a feeling that there will be enough people out there that feel the same and it is a good enough reason to continue developing the Prometheus SDKs tbh.

Complexity is inevitable?

The OTel SDKs and format are more complicated for a reason. They cater to a lot more use cases and for the widespread adoption that OpenTelemetry is looking for, it is inevitable that it has to address the edge cases explicitly. And it is doing a great job at that!

And sometimes this complexity is inevitable even for Prometheus. For example, for supporting sparse exponential histograms (native histograms), Prometheus is adopting the protobuf format as a first class format again. The text based format is not going anywhere, but it is also not suitable for representing native histograms. You can still use the text format if you don’t want native histograms.
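For anyone who has not looked at native histograms, this is a sketch of the bucketing idea as I understand it: bucket boundaries are powers of a base derived from a "schema" number, and only the buckets that actually received observations are stored. The function names are hypothetical and this only illustrates the arithmetic. It leaves out the zero bucket, negative observations and everything about the actual encoding.

```python
import math


def bucket_index(value, schema):
    """Index i of the bucket (base**(i-1), base**i] holding a positive value,
    where base = 2 ** (2 ** -schema)."""
    if value <= 0:
        raise ValueError("this sketch only handles positive observations")
    return math.ceil(math.log2(value) * 2 ** schema)


def bucket_upper_bound(index, schema):
    """Inclusive upper boundary of the bucket with the given index."""
    base = 2 ** (2 ** -schema)
    return base ** index


def observe_all(values, schema):
    """Sparse representation: only buckets that saw an observation get an entry."""
    buckets = {}
    for value in values:
        index = bucket_index(value, schema)
        buckets[index] = buckets.get(index, 0) + 1
    return buckets


# Schema 0 means base 2, so boundaries are ..., 0.25, 0.5, 1, 2, 4, 8, ...
sparse = observe_all([0.3, 3, 3.5, 100], schema=0)
print(sparse)  # {-1: 1, 2: 2, 7: 1}
```

A higher schema gives finer buckets. With schema 3, the value 100 lands in bucket 54, which spans roughly 98.7 to 107.6.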

Does that mean that eventually Prometheus will be just as complex as OpenTelemetry? I hope not. Prometheus has historically said no to a lot of niche use cases and that helped keep it simple. I hope we can continue to do the same.

Collaboration is key

Finally, this doesn’t mean that the two projects are incompatible. If anything, this provides a lot of opportunities to learn from each other. Prometheus is evolving to cater to OpenTelemetry use cases, for example, by enabling push-based ingestion and even looking into adding support for delta metrics.
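If delta metrics are new to you: a Prometheus counter reports a running total, while a delta stream reports only the change since the previous report. The sketch below turns one into the other with a running sum. It is a toy under my own assumptions (a single series, samples arriving in order, no resets or gaps), not how Prometheus or the OpenTelemetry Collector actually do the conversion.

```python
def deltas_to_cumulative(delta_samples):
    """Turn (timestamp, delta) pairs into (timestamp, running_total) pairs."""
    total = 0
    cumulative = []
    for timestamp, delta in delta_samples:
        total += delta
        cumulative.append((timestamp, total))
    return cumulative


# Hypothetical request counts pushed once a minute.
pushed = [(0, 5), (60, 2), (120, 0), (180, 7)]
print(deltas_to_cumulative(pushed))
# [(0, 5), (60, 7), (120, 7), (180, 14)]
```

The hard parts in a real system are exactly the things this sketch ignores: out-of-order samples, restarts and many writers pushing to the same series.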

People can use OpenTelemetry auto-instrumentation for most metrics, but use the Prometheus SDK for their custom metrics. This is already possible in Java and Golang. I would love to see a simpler exposition format in OpenTelemetry for embedded and resource-constrained use cases.

Another thing that the two communities can collaborate on is the exporter ecosystem. OpenTelemetry is creating an alternative ecosystem for monitoring infrastructure with its receivers in the OpenTelemetry Collector. I firmly believe that we can accelerate both ecosystems by coming together in this initiative.

Thinking beyond 3.0

Prometheus 3.0 is almost here (initial launch at PromCon 2024) and I have been wondering what I would like to see in the next few years in Prometheus.

Prometheus is feature-complete?

Prometheus is simple because it chose a narrow scope. People wanted a simple pull based monitoring and alerting system, and that requirement is more or less fulfilled with the current version of Prometheus. It works extremely well for the monitoring use case. So, is Prometheus feature-complete? Well, if you pick a sufficiently narrow scope, then yes, it’s feature complete.

It is inevitable that as the project gets new contributors, they will come with new ideas, and the scope of the project will evolve. We are on the verge of adding delta + push-based ingestion to Prometheus as a first-class feature. This is a big departure from the origins of Prometheus. I think this is great, and I am looking forward to adding more people into the team and seeing where they take the project.

However, I would like the project to keep its simplicity and “just works” attitude as it evolves.

Thinking beyond the server

PromQL is surprisingly widely adopted. There are even more projects that are looking to adopt it (for example, ClickHouse). However, PromQL has been tied to its usage in the Prometheus server for a long time. Should we have a PromQL working group with representatives from various projects to understand where they’d like to evolve the language?

Is it time to decouple the language from the server? I feel like it’s a great language and can have a larger scope while the server keeps its focus on monitoring.

Make metrics and alerting accessible to all

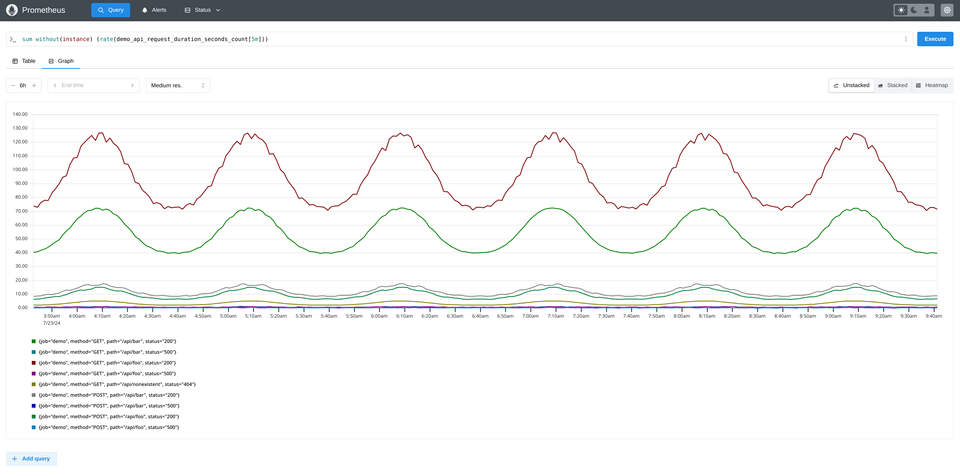

PromQL is great! However, a “query-first” UX is intimidating for a lot of users. We need to make data exploration easier for non-technical users. Grafana’s Explore Metrics is a good first step but I’d like it to come to the Prometheus UI. I use GitHub Copilot A LOT. It’s insanely good for Golang. I want to see something similar for Prometheus, helping users who are not very proficient in PromQL get their job done.

We need to make creating and managing alerts easier. A lot of users prefer a UI to create and manage alerts. We have historically said no to this for good reasons, and those reasons still hold. However, I would like a project in the ecosystem that manages alert files on disk and has a UI to create and view alerts. It could also be configured via a fleet management system like OpAMP.
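To make the idea concrete, here is a sketch of the "files on disk" half of such a tool: take what a user filled in on a form and render a Prometheus rule file from it. Everything about the tool is hypothetical, including `render_rule_file`, the shape of the `alerts` dictionaries and the example alert itself. Only the output layout (groups, rules, alert, expr, for, labels, annotations) follows the existing rule file format. I am using `json.dumps` for quoting because a JSON string is also a valid double-quoted YAML string.

```python
import json


def render_rule_file(group_name, alerts):
    """Render a Prometheus alerting rule file from simple form-style input."""
    lines = ["groups:", f"  - name: {json.dumps(group_name)}", "    rules:"]
    for alert in alerts:
        lines.append(f"      - alert: {alert['name']}")
        lines.append(f"        expr: {json.dumps(alert['expr'])}")
        lines.append(f"        for: {alert['for']}")
        lines.append("        labels:")
        lines.append(f"          severity: {json.dumps(alert['severity'])}")
        lines.append("        annotations:")
        lines.append(f"          summary: {json.dumps(alert['summary'])}")
    return "\n".join(lines) + "\n"


# Hypothetical alert a user might create from a UI form.
rule_text = render_rule_file(
    "air-quality",
    [
        {
            "name": "HighCO2",
            "expr": "airgradient_co2_ppm > 1000",
            "for": "10m",
            "severity": "warning",
            "summary": "CO2 has been above 1000 ppm for 10 minutes",
        }
    ],
)
print(rule_text, end="")
```

A real tool would write this to the rules directory and trigger a reload, and it would validate the expression before saving. The nice property is that Prometheus itself does not need to change at all.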

High Cardinality?

High Cardinality support is interesting, and by high-cardinality, I mean having 100K+ values in your labels. Like userID or similar. You can do it today, if you are careful, but it’s not something that Prometheus is built for. It is useful for troubleshooting but not easy or trivial to implement correctly. I’d like Prometheus to support it, however not if it makes operating Prometheus complicated or introduces dependencies on external systems.
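A bit of back-of-the-envelope arithmetic shows why this needs care. The number of series for one metric is bounded by the product of the distinct values of each label, so one label with 100K values multiplies everything else. The label names and counts below are invented for illustration.

```python
import math


def max_series(label_cardinalities):
    """Upper bound on series for one metric: product of distinct values per label."""
    return math.prod(label_cardinalities.values())


# Hypothetical HTTP metric: 5 methods, 8 status codes, 20 instances.
without_user = {"method": 5, "code": 8, "instance": 20}
with_user = {**without_user, "user_id": 100_000}

print(max_series(without_user))  # 800
print(max_series(with_user))     # 80000000
```

This is an upper bound, since not every combination shows up in practice, but it explains why a single userID label can take a metric from hundreds of series to tens of millions.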

I would very much like to see ecosystem projects like Mimir, Thanos, etc. solve this problem and help evolve PromQL as required. ClickHouse is also a great candidate for it.

Goutham & Prometheus in 10 years

It’s been more than 9 years since I first came across Prometheus. I have a feeling I’ll be around Prometheus for 10 more years. But I would like to use it differently in the next decade.

I want it everywhere. I want it to become a consumer product. For example, I’d like my friends to be able to visualize their health data in Prometheus, alongside their thermostat data, alongside their bank data. Everything natively integrates with Prometheus, and people just have one running either locally or in the cloud.

Maybe Prometheus is not the right solution for this, but hey, this is the world I’d like to live in and help build, and Prometheus is the system I know 🙂 And this means Prometheus needs to keep its simplicity so even non-technical people can run it for personal use.
